Virtualization, Cloud, Infrastructure and all that stuff in-between

My ramblings on the stuff that holds it all together

New Home Lab Design

I have had a lab/test setup at home for over 15 years now. It’s proven invaluable for keeping my skills up to date and for studying towards the various certifications I’ve had to pass for work; plus I’m a geek at heart and I love this stuff 🙂

Over the years it’s grown from a BNC-based 10 Mbit LAN running NetWare 3/Win 3.x, through NetWare 4/NT4, Slackware Linux and all variants of Windows 200x/Red Hat.

Around 2000 I started to make heavy use of VMware Workstation to reduce the amount of hardware I had (from 8 PCs in various states of disrepair down to 2 or 3 homebrew PCs). In later years there has been an array of cheap server kit on eBay, and the last time we moved house I consolidated all the ageing hardware into a bargain eBay find: a single Compaq ML570 G1 (quad CPU/12 GB RAM and an external HDD array), which served fine until I realised just how high our home electricity bills were becoming!

Note the best practice location of my suburban data centre, beer-fridge providing hot-hot aisle heating, pressure washer conveniently located to provide fine-mist fire suppression; oh and plenty of polystyrene packing to stop me accidentally nudging things with my car. 🙂

I’ve been using a pair of HP D530 SFF desktops to run ESX 3.5 for the last year and they have performed excellently (links here, here and here), but I need more power and the ability to run 64-bit VMs (D530s are 32-bit only). I also need to start work on vSphere, which unfortunately doesn’t look like it will run on a D530.

So I acquired a second-hand ML110 G4 and added 8 GB of RAM. This has served as my vSphere test lab to date, but I now want to add a second vSphere node and use DRS/HA etc. (it looks like no FT for me, unfortunately). Techhead put me onto a deal that Servers Plus are currently running, so I now have 2 x ML110 servers 🙂 They are also doing quad-core AMD boxes for even less money here – see Techhead for details of how to get free delivery here.

In the past my labs have grown rather organically as I’ve acquired hardware or components have failed; as this time round I’ve had to spend a fair bit of my own money buying items, I thought it would be a good idea to design it properly from the outset 🙂

The design goals are:

- ESX 3.5 cluster with DRS/HA to support VI 3.5 work

- vSphere DRS/HA cluster to support future work and more advanced beta testing

- Ability to run 64-bit VMs (for Exchange 2007)

- Windows 2008 domain services

- Use clustering to allow individual physical hosts to be rebuilt temporarily for things like Hyper-V or P2V/V2P testing

- Support a separate WAN DMZ and my wireless network

- Support VLAN tagging

- Adopt best-practice for VLAN isolation for vMotion, Storage etc. as far as practical

- VMware Update Manager for testing

- Keep ESX 3/4 clusters separate

- Resource pool for “production” home services – MP3/photo library etc.

- Resource pool for test/lab services (Windows/Linux VMs etc.)

- iSCSI SAN (OpenFiler as a VM) to allow clustering, and have all VMs run over iSCSI.
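To illustrate what the two resource pools buy you: under CPU contention, sibling resource pools receive capacity in proportion to their shares (ignoring reservations and limits). The sketch below assumes, hypothetically, that the "production" pool is set to High and the test/lab pool to Normal, using VMware's default CPU share values for resource pools (High 8000, Normal 4000, Low 2000). The pool names and the 6000 MHz cluster figure are illustrative, not measured.

```python
# Default CPU share values for a resource pool (4:2:1 ratio).
SHARE_LEVELS = {"low": 2000, "normal": 4000, "high": 8000}


def split_under_contention(pools, capacity_mhz):
    """Divide contended CPU capacity between sibling pools by share value.

    Reservations and limits are ignored; they would change the result.
    """
    total = sum(SHARE_LEVELS[level] for level in pools.values())
    return {name: capacity_mhz * SHARE_LEVELS[level] / total
            for name, level in pools.items()}


if __name__ == "__main__":
    # Hypothetical pool names and cluster capacity.
    pools = {"production": "high", "test-lab": "normal"}
    for name, mhz in split_under_contention(pools, 6000).items():
        print(f"{name}: {mhz:.0f} MHz")
```

With these assumptions the production pool gets two thirds of the contended CPU, so MP3/photo services stay responsive while lab VMs are busy.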

The design challenges are:

- this has to live in my garage rack

- I need to limit the overall number of hosts to the bare minimum

- budget is very limited

- make heavy re-use of existing hardware

- Cheap Netgear switch with only basic VLAN support and no budget to buy a decent Cisco.

Luckily I’m looking to start from scratch in terms of my VM estate (30+ VMs). Most of them are test machines or something that I want to build separately, and data has been archived off, so I can start with a clean slate.

The 1st pass at my design for the ESX 3.5 cluster looks like the following

I had some problems with the iSCSI VLAN, and after several days of head scratching I figured out why: in my network the various VLANs aren’t routable (my switch doesn’t do Layer 3 routing). For software iSCSI in ESX 3.5 to work, the service console needs to be able to reach the iSCSI network, not just the VMkernel port. I resolved this by adding an extra service console interface on the iSCSI VLAN, and discovery worked immediately.
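A minimal sketch of that fix as ESX 3.5 service console commands, assuming a single vSwitch. The vSwitch name, port group names and 10.0.103.x addresses are hypothetical; only VLAN 103 comes from this lab. The Python just builds the `esxcfg-*` command lines so they can be reviewed before being typed on the host.

```python
import shlex

STORAGE_VLAN = 103  # storage VLAN used in this lab


def iscsi_vlan_commands(vswitch, vmk_ip, sc_ip, netmask="255.255.255.0"):
    """Build ESX 3.5 commands that put a VMkernel port AND a second service
    console interface on the iSCSI VLAN, then enable software iSCSI."""
    vmk_pg = "iSCSI"                   # hypothetical port group names
    sc_pg = "Service Console iSCSI"
    cmds = [
        ["esxcfg-vswitch", "-A", vmk_pg, vswitch],                           # add port group
        ["esxcfg-vswitch", "-v", str(STORAGE_VLAN), "-p", vmk_pg, vswitch],  # tag it
        ["esxcfg-vmknic", "-a", "-i", vmk_ip, "-n", netmask, vmk_pg],        # VMkernel port
        ["esxcfg-vswitch", "-A", sc_pg, vswitch],
        ["esxcfg-vswitch", "-v", str(STORAGE_VLAN), "-p", sc_pg, vswitch],
        ["esxcfg-vswif", "-a", "vswif1", "-p", sc_pg,                        # 2nd service console
         "-i", sc_ip, "-n", netmask],
        ["esxcfg-swiscsi", "-e"],                                            # software initiator
    ]
    # shlex.join quotes names containing spaces (Python 3.8+).
    return [shlex.join(c) for c in cmds]


if __name__ == "__main__":
    for line in iscsi_vlan_commands("vSwitch0", "10.0.103.11", "10.0.103.21"):
        print(line)
```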

I also needed to make sure the Netgear switch had the relevant ports set to T (Tag egress mode) for the VLAN mapping to work. There isn’t much documentation on this on the web, but this is how you get it to work.
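The T/U setting decides what happens to a frame as it leaves a switch port: with T the 802.1Q tag (carrying the VLAN ID) is kept, so the ESX vSwitch can sort traffic into port groups; with U the tag is stripped. The toy model below shows that behaviour; it is not Netgear's firmware logic, and the port memberships (other than storage VLAN 103) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Frame:
    payload: str
    vlan: Optional[int] = None  # None = untagged (no 802.1Q header)


def egress(frame_vlan: int, payload: str, membership: Dict[int, str]) -> Optional[Frame]:
    """Return the frame as it leaves a port, or None if the port is not a
    member of the frame's VLAN. membership maps VLAN ID -> 'T' or 'U'."""
    mode = membership.get(frame_vlan)
    if mode is None:
        return None                              # not a member: dropped
    if mode == "T":
        return Frame(payload, vlan=frame_vlan)   # tag kept for the vSwitch
    return Frame(payload)                        # 'U': tag stripped


# Hypothetical ports: an ESX uplink carrying several VLANs tagged,
# and an untagged access port on the storage VLAN.
esx_port = {1: "T", 102: "T", 103: "T"}
filer_port = {103: "U"}
```

If the ESX uplink were left at U, frames would arrive without a VLAN ID and the vSwitch port groups with VLAN IDs set would never see them.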

The vSwitch configuration looks like the following. Note these boxes only have a single GbE NIC, so all traffic passes over it; not ideal, but performance is acceptable.

iSCSI SAN – OpenFiler

In this instance I have implemented two OpenFiler VMs, one on each D530 machine, each presenting a single 200 GB LUN which is mapped to both hosts.

Techhead has a good step-by-step guide on how to set up OpenFiler here that you should check out if you want to know how to set up the volumes etc.

I made sure I set the target name in OpenFiler to match the LUN and filer name so it’s not too confusing in the iSCSI setup, as shown below:

If it helps, my target naming convention was vm-filer-X-lun-X, which means I can have multiple filers presenting multiple targets with a sensible naming convention. The target name is only visible within iSCSI communications, but it does need to be unique if you will be integrating with real-world stuff.
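A small sketch of the vm-filer-X-lun-X convention: build names, parse them back, and flag duplicates before they reach a real iSCSI network. The helper names are hypothetical; only the naming pattern comes from this post.

```python
import re
from collections import Counter

# Convention from this post: vm-filer-<filer number>-lun-<LUN number>
NAME_RE = re.compile(r"^vm-filer-(\d+)-lun-(\d+)$")


def target_name(filer: int, lun: int) -> str:
    return f"vm-filer-{filer}-lun-{lun}"


def parse_target_name(name: str):
    """Return (filer, lun) for a conforming name, else None."""
    m = NAME_RE.match(name)
    return (int(m.group(1)), int(m.group(2))) if m else None


def find_duplicates(names):
    """Target names must be unique; return any that appear more than once."""
    return sorted(n for n, count in Counter(names).items() if count > 1)
```

For the current lab (two filers, one LUN each) that gives `vm-filer-1-lun-1` and `vm-filer-2-lun-1`.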

Storage Adapters view from an ESX host – it doesn’t know the iSCSI target is a VM that it is running 🙂

Because I have a non-routed network (no Layer 3 routing between VLANs), my storage is all hidden in VLAN 103. To administer my OpenFiler I have to use a browser in a VM connected to the storage VLAN. I did play around with multi-homing my OpenFilers but didn’t have much success getting iSCSI to play nicely; it’s not too much of a pain to do it this way, and I’m sure my storage is isolated to a specific VLAN.
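Without a Layer 3 router, two machines can only talk if they share a VLAN and its subnet, which is why the admin browser has to live on the storage VLAN. A minimal sketch using Python's `ipaddress` module; the subnets are hypothetical (one /24 per VLAN), only VLAN 103 comes from this lab.

```python
import ipaddress

# Hypothetical addressing: one /24 per VLAN and no router between them.
VLAN_SUBNETS = {
    103: ipaddress.ip_network("10.0.103.0/24"),  # storage VLAN
    1: ipaddress.ip_network("192.168.1.0/24"),   # hypothetical home LAN
}


def reachable_without_routing(src_ip: str, dst_ip: str) -> bool:
    """True only if both addresses sit in the same VLAN subnet."""
    src, dst = ipaddress.ip_address(src_ip), ipaddress.ip_address(dst_ip)
    return any(src in net and dst in net for net in VLAN_SUBNETS.values())
```

A desktop on the home LAN therefore cannot reach the OpenFiler web UI, while an admin VM with a NIC on VLAN 103 can.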

The 3.5 cluster will run my general VMs like Windows domain controllers, file servers and my SSL VPN, and they will vMotion between the nodes perfectly. HA won’t really work, as the back-end storage for the VMs lives inside an OpenFiler, which is itself a VM, but it suits my needs and Storage vMotion makes online maintenance possible with some advance planning.
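Why HA doesn't help here can be shown with a small dependency model: HA restarts a VM on the surviving host, but only if its datastore is still reachable, and here the datastore disappears with the OpenFiler VM on the failed host. The placement below is hypothetical, loosely based on the services named above, and assumes each OpenFiler VM sits on its host's local disk.

```python
# Hypothetical placement: which host runs each filer, and which filer
# provides the datastore for each VM.
FILER_HOST = {"vm-filer-1": "d530-a", "vm-filer-2": "d530-b"}
VM_FILER = {"dc1": "vm-filer-1", "fileserver": "vm-filer-2", "sslvpn": "vm-filer-1"}


def restartable_after_failure(failed_host: str) -> list:
    """VMs HA could restart elsewhere: only those whose backing OpenFiler
    VM was NOT running on the failed host."""
    return sorted(vm for vm, filer in VM_FILER.items()
                  if FILER_HOST[filer] != failed_host)


if __name__ == "__main__":
    for host in ("d530-a", "d530-b"):
        print(host, "fails ->", restartable_after_failure(host))
```

Under these assumptions, losing either host strands every VM stored on that host's filer, wherever the VM itself was running.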

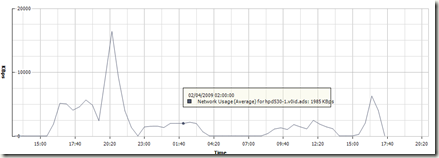

Performance from the VM’d OpenFilers has been pretty good and I’m planning to run as many of my VMs as possible on iSCSI; the vSphere cluster running on the ML110s will likely use the OpenFilers as its SAN storage.

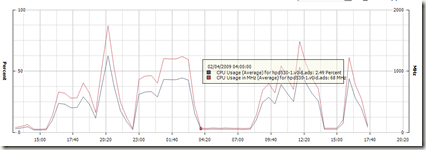

This is the CPU chart from one of the D530 nodes over the last 32 hours, whilst I’ve been doing some serious Storage vMotion between the OpenFiler VMs it hosts.

That’s it for now. I’m going to build out the vSphere side of the lab shortly on the ML110s and will post what I can (subject to NDA, although GA looks to be close).

Great post, wonderful lab. It’s a shame that the Openfiler runs in a VM (or was that a choice to save on the electricity bill? :-) ).

I tried running ESX 3.5 on one physical box, connected to Openfiler running in a VM on another physical box. I built this lab just for testing the Openfiler setup; it was the first time I had set up Openfiler. I have written a detailed step-by-step guide about it here:

http://www.vladan.fr/how-to-configure-openfiler-iscsi-storage-for-use-with-vmware-esx/

It’s aimed at real beginners, and includes a diagram.

For your routing needs, you should look at the open-source Vyatta solution, as it can run in a VM.

I am planning my lab environment now, as a Citrix consultant. I am planning to implement 4 hosts: 2 Citrix/Xen hosts and 2 Microsoft/Hyper-V hosts.

I’m looking at hardware to implement, as I have very limited space in my townhouse as well.

Stay in touch at twitter.com/chriscuratolo

What is the model of the basic netgear network switch that you bought? What would be your decent recommended switch? I am buying components for my lab, so your inputs will help. Thanks in advance.

What is the Netgear switch that you bought with the basic VLAN support? Also, what is the decent Cisco model that you would have liked, and why? I am building a home lab and your inputs will help me buy the right gear. Thanks a bunch.

Boger.

I have two Openfiler virtual appliances, one on each of my ESX hosts. If I want to reboot one of my ESX hosts I need to power down the Openfiler on that host. This causes the ESX host to hang while unmounting the filesystem from the downed Openfiler VM, as the iSCSI storage is no longer available to ESX. Is there a way to script unmounting the filesystem when shutting down the Openfiler VM, and mounting it again when the VM starts up?


I would like to build my lab in a similar fashion. Can you please post the model number of the switch that you used in your lab? Thanks for all of the information.

The switch I am using is a Netgear GS748T, but to be honest there are cheaper and quieter options. Keep an eye on the blog; there will be a series of joint postings with http://www.techhead.co.uk on the current lab setups, with all the full tech details and where to buy etc.

Hi there, I just wanted to know if you could provide some more detail on how you got ESX working with VLANs on the GS748T switch. We have two switches set up as a trunk back to the router, but when we set the ports for the ESX server as tagged we can’t communicate. It only works when they are untagged.

Any support would be great.

Thanks

Nick – I’ve got mine set to ‘Tag Egress’ packets for the relevant VLAN using 802.1Q VLANs on the switch port, and it works OK. The UI is a bit odd: you need to make sure you select the correct VLAN in the drop-down /then/ set the T/U setting for the relevant switch port, and make sure the PVID isn’t set on those ports.

My ESX hosts are happily accepting multiple VLANs up and down their ‘T’ ports, but by design my OpenFiler boxes are only available on my storage VLAN. They are plugged into ports with port-based VLANs set (PVID), and I had to set those ports to ‘U’ (Untag egress packets) to get it to work.

I’ve not managed to get multiple trunked NICs from an ESX host into a single switch to work reliably on this switch (they don’t fail over when a NIC is unplugged), so maybe it’s something to do with that. These aren’t the cleverest switches when it comes to trunking configuration/features.

I will try to do a blog post with some screenshots of my switch config in the next couple of days, but I’ve only got a single switch so I can’t try out your configuration unfortunately.
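The port rules described in the two replies above can be written down as a quick checklist: ESX ports are tagged (T) on every VLAN they carry with no PVID set, while OpenFiler ports are untagged (U) on the storage VLAN with the PVID set to match. This is a hypothetical sketch of those rules, not a Netgear API; "PVID not set" is modelled as `None`, and the port names are made up.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PortConfig:
    name: str
    membership: Dict[int, str]   # VLAN ID -> 'T' or 'U'
    pvid: Optional[int] = None   # None models "PVID not set"


def check_port(port: PortConfig) -> List[str]:
    """Return a list of problems with one port against the rules above."""
    problems = []
    untagged = [vlan for vlan, mode in port.membership.items() if mode == "U"]
    if len(untagged) > 1:
        problems.append(f"{port.name}: more than one untagged VLAN {untagged}")
    if untagged and port.pvid != untagged[0]:
        problems.append(f"{port.name}: PVID should match untagged VLAN {untagged[0]}")
    if not untagged and port.pvid is not None:
        problems.append(f"{port.name}: tagged-only (ESX) port should not have a PVID set")
    return problems
```

For example, `check_port(PortConfig("filer1", {103: "U"}, pvid=103))` returns an empty list, while leaving that port's PVID at 1 is flagged.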

I have the chance to buy 4 ML110 G4s very cheaply and have found a couple of posts on the internet claiming that they will not support 64-bit guest OSes. I noticed that you are using two ML110 G4s, so I just wanted to ask: can you confirm whether they do NOT support 64-bit guest OSes?

Best regards

Martin

Mine are running vSphere 4 and can run x64 guests. You probably need to check which CPUs they have: mine are the Intel Xeon ones. I’ve seen a fair few cheap ones around, but they have non-Xeon chips (AMD/P4 etc.), which maybe don’t do x64; I’ve not had one, so I don’t know specifically, I’m afraid.

You won’t be able to do FT on those ML110s either, if that’s a concern; the quad-core AMD ML115 G5 is the current weapon of choice.

OK, thanks for the response. I checked the CPUs just now and it’s an Intel Xeon 3040 in all 4 of them. That looks promising, thanks for the help!

Great – my G4 has a 3040 CPU and it runs vSphere and x64 guests fine; no FT support – but works perfectly for me otherwise.

Excellent, that will be enough for me! Thanks for the help 🙂
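One way to check a second-hand box's CPU before committing, assuming you can boot any Linux live CD on it, is to read the `flags` line from `/proc/cpuinfo`. `lm` (long mode) means the CPU is 64-bit capable, and `vmx` (Intel VT-x) or `svm` (AMD-V) means hardware virtualisation is present; as I understand it, VMware's 64-bit guest support on Intel CPUs relies on VT-x, which also has to be enabled in the BIOS. This is a generic sketch, not an official VMware compatibility check.

```python
def cpu_features(cpuinfo_text: str) -> dict:
    """Report 64-bit (lm) and hardware virtualisation (vmx/svm) support
    from the first 'flags' line of Linux /proc/cpuinfo output."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags.update(line.split(":", 1)[1].split())
            break
    return {
        "64bit": "lm" in flags,
        "hw_virt": bool(flags & {"vmx", "svm"}),
    }

# On the box itself: cpu_features(open("/proc/cpuinfo").read())
```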


Hi there, would this hardware be adequate to run vSphere and 64-bit VMs using VMware Workstation on a single physical host?

The D530 in this (old) post doesn’t support x64 VMs (or physical OS installations either).

You’d be best to check out my vTARDIS project for that requirement – https://vinf.net/vTARDIS