Virtualization, Cloud, Infrastructure and all that stuff in-between

My ramblings on the stuff that holds it all together

Category Archives: Work

New Home Lab Design

I have had a lab/test setup at home for over 15 years now. It’s proven invaluable for keeping my skills up to date and helping me study towards the various certifications I’ve had to pass for work; plus I’m a geek at heart and I love this stuff 🙂

Over the years it’s grown from a BNC-based 10Mbit LAN running Netware 3/Win 3.x, through Netware 4/NT4 and Slackware Linux, to all variants of Windows 200x/RedHat.

Around 2000 I started to make heavy use of VMware Workstation to reduce the amount of hardware I had (from 8 PCs in various states of disrepair to 2 or 3 homebrew PCs). In latter years there has been an array of cheap server kit on eBay, and the last time we moved house I consolidated all the ageing hardware into a bargain eBay find – a single Compaq ML570G1 (quad CPU/12GB RAM and an external HDD array) – which served fine until I realised just how big our home electricity bills were becoming!

Note the best practice location of my suburban data centre, beer-fridge providing hot-hot aisle heating, pressure washer conveniently located to provide fine-mist fire suppression; oh and plenty of polystyrene packing to stop me accidentally nudging things with my car. 🙂

I’ve been using a pair of HP D530 SFF desktops to run ESX 3.5 for the last year and they have performed excellently (links here here and here), but I need more power and the ability to run 64-bit VMs (D530s are 32-bit only). I also need to start work on vSphere, which unfortunately doesn’t look like it will run on a D530.

So I acquired a 2nd-hand ML110 G4 and added 8GB RAM – this has served as my vSphere test lab to date, but I now want to add a 2nd vSphere node and use DRS/HA etc. (looks like no FT for me unfortunately though). Techhead put me onto a deal that Servers Plus are currently running, so I now have 2 x ML110 servers 🙂 They are also doing quad-core AMD boxes for even less money here – see Techhead for details of how to get free delivery here

In the past my labs have grown rather organically as I’ve acquired hardware or components have failed; as this time round I’ve had to spend a fair bit of my own money buying items, I thought it would be a good idea to design it properly from the outset 🙂

The design goals are:

- ESX 3.5 cluster with DRS/HA to support VI 3.5 work

- vSphere DRS/HA cluster to support future work and more advanced beta testing

- Ability to run 64-bit VMs (for Exchange 2007)

- Windows 2008 domain services

- Use clustering to allow individual physical hosts to be rebuilt temporarily for things like Hyper-V or P2V/V2P testing

- Support a separate WAN DMZ and my wireless network

- Support VLAN tagging

- Adopt best-practice for VLAN isolation for vMotion, Storage etc. as far as practical

- VMware Update Manager for testing

- Keep ESX 3/4 clusters separate

- Resource pool for “production” home services – MP3/photo library etc.

- Resource pool for test/lab services (Windows/Linux VMs etc.)

- iSCSI SAN (OpenFiler as a VM) to allow clustering, and have all VMs run over iSCSI.

The design challenges are:

- this has to live in my garage rack

- I need to limit the overall number of hosts to the bare minimum

- budget is very limited

- make heavy re-use of existing hardware

- Cheap Netgear switch with only basic VLAN support and no budget to buy a decent Cisco.

Luckily I’m looking to start from scratch in terms of my VM estate (30+ VMs); most of them are test machines or something that I want to build separately, and the data has been archived off so I can start with a clean slate.

The 1st pass at my design for the ESX 3.5 cluster looks like the following

I had some problems with the iSCSI VLAN, and after several days of head scratching I figured out why: in my network the various VLANs aren’t routable (my switch doesn’t do Layer 3 routing). For iSCSI to work, the service console needs to be able to reach the iSCSI network that the VMkernel port sits on. In my case I resolved this by adding an extra service console on the iSCSI VLAN, and discovery worked fine immediately.

I also needed to make sure the Netgear switch had the relevant ports set to T (tagged egress mode) for the VLAN mapping to work – there isn’t much documentation on this on the web, but this is how you get it to work.

The vSwitch configuration looks like the following – note these boxes only have a single GbE NIC each, so all traffic passes over it – not ideal, but performance is acceptable.
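To illustrate the non-routed VLAN problem: with no Layer 3 routing, an interface can only reach an iSCSI target that sits in its own subnet. Here is a minimal Python sketch of that check. All of the IP addresses and the /24 masks are made-up examples rather than my real lab addressing, and the interface labels are just descriptive strings.

```python
import ipaddress


def same_subnet(interface_cidr: str, target_ip: str) -> bool:
    """Return True if target_ip falls inside the subnet of interface_cidr."""
    network = ipaddress.ip_interface(interface_cidr).network
    return ipaddress.ip_address(target_ip) in network


def reachable_without_router(interfaces: dict, target_ip: str) -> dict:
    """Map each interface name to whether it can reach the target with no routing."""
    return {name: same_subnet(cidr, target_ip) for name, cidr in interfaces.items()}


# Hypothetical addressing, purely for illustration
ISCSI_TARGET = "192.168.103.10"  # OpenFiler on the storage VLAN
INTERFACES = {
    "service console (LAN)": "192.168.1.20/24",
    "VMkernel (iSCSI VLAN)": "192.168.103.20/24",
    "extra service console (iSCSI VLAN)": "192.168.103.21/24",
}

if __name__ == "__main__":
    for name, ok in reachable_without_router(INTERFACES, ISCSI_TARGET).items():
        status = "reachable" if ok else "NOT reachable without a router"
        print(f"{name}: {status}")
```

With these example addresses the original service console is on the wrong subnet, which is the situation the extra service console on the iSCSI VLAN gets round.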

iSCSI SAN – OpenFiler

In this instance I have implemented 2 OpenFiler VMs, one on each D530 machine, each presenting a single 200GB LUN which is mapped to both hosts.

Techhead has a good step-by-step guide on how to set up an OpenFiler here, which you should check out if you want to know how to set up the volumes etc.

I made sure I set the target name in OpenFiler to match the LUN and filer name so it’s not too confusing in the iSCSI setup – as shown below.

If it helps, my target naming convention was vm-filer-X-lun-X, which means I can have multiple filers presenting multiple targets with a sensible naming convention. The target name is only visible within iSCSI communications, but it does need to be unique if you will be integrating with real-world stuff.
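The naming convention can be sketched as a pair of small Python helpers. This is only an illustration: I'm assuming the X placeholders are plain numbers, the function names are invented for the example, and it only builds the vm-filer-X-lun-X part of the name, not any prefix your iSCSI target software may add.

```python
import re
from typing import Tuple

# Assumption: both X placeholders in vm-filer-X-lun-X are decimal numbers
TARGET_PATTERN = re.compile(r"^vm-filer-(\d+)-lun-(\d+)$")


def target_name(filer: int, lun: int) -> str:
    """Build a target name following the vm-filer-X-lun-X convention."""
    if filer < 0 or lun < 0:
        raise ValueError("filer and lun numbers must not be negative")
    return f"vm-filer-{filer}-lun-{lun}"


def parse_target_name(name: str) -> Tuple[int, int]:
    """Split a target name back into (filer, lun); raise if it does not match."""
    match = TARGET_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a vm-filer-X-lun-X name: {name!r}")
    return int(match.group(1)), int(match.group(2))


def all_unique(names) -> bool:
    """Check that no target name is used twice across the filers."""
    names = list(names)
    return len(names) == len(set(names))


if __name__ == "__main__":
    names = [target_name(filer, 1) for filer in (1, 2)]
    print(names, "unique:", all_unique(names))
```

Generating the names rather than typing them makes it harder to end up with two filers presenting the same target name.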

Storage Adapters view from an ESX host – it doesn’t know the iSCSI target is a VM that it is running 🙂

Because my VLANs aren’t routed, my storage is all hidden in VLAN 103; to administer my OpenFiler I have to use a browser in a VM connected to the storage VLAN. I did play around with multi-homing my OpenFilers but didn’t have much success getting iSCSI to play nicely. It’s not too much of a pain to do it this way, and I can be sure my storage is isolated to a specific VLAN.

The 3.5 cluster will run my general VMs like Windows domain controllers, file servers and my SSL VPN, and they will vMotion between the nodes perfectly. HA won’t really work as the back-end storage for the VMs lives inside an OpenFiler, which is itself a VM – but it suits my needs, and storage vMotion makes online maintenance possible with some advance planning.

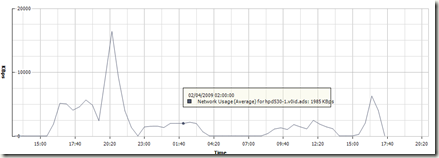

Performance from VM’d OpenFilers has been pretty good and I’m planning to run as many as possible of my VMs on iSCSI – the vSphere cluster running on the ML110s will likely use the OpenFilers as its SAN storage.

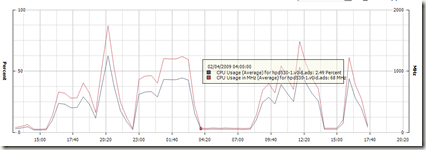

This is the CPU chart from one of the D530 nodes over the last 32hrs, whilst I’ve been doing some serious storage vMotion between the OpenFiler VMs it hosts.

That’s it for now. I’m going to build out the vSphere side of the lab shortly on the ML110s and will post what I can (subject to NDA, although GA looks to be close).

And so begins 2009..

Ok, well it was last week 🙂 apologies for the lack of postings in the last month which was due to a mix of well-earnt holiday and some very busy periods of work in the December run-down.

Anyways, I would like to wish all vinf.net readers a belated happy new year. I’ve been amazed at how much this blog has grown over the last year; since my last review it’s now topped 120k hits – and (un)interesting factoid: Thursdays are consistently the busiest day for traffic!

Rest assured I haven’t been idle in the month’s absence from blogging. I have a number of interesting posts in the pipeline: continuing my PlateSpin PowerConvert series (with the new product names/line-up that was announced in the meantime!) and fleshing out my cloud reference architecture, VMware vCloud, Amazon EC2 and some further work on cheap ESX PC solutions for home/labs.

In other news, VMware have kindly offered* me a press pass to VMworld Europe in Feb, which I’m honoured to accept. I will hopefully be following Scott’s example by blogging extensively before, during and after, although I’ll probably stick to a day-by-day summary like I did for TechEd last year and break out any specific areas of detailed interest into separate posts so that they get the attention and level of detail required.

I’ve also submitted for a number of presenter sessions so fingers crossed they’ll be accepted.

2009 looks to be a very interesting year for the virtualization industry with increased adoption and considering the current economic climate maybe the VI suite should be renamed the Credit Crunch Suite rather than vSphere as more and more companies consolidate and virtualize to save money 🙂

Cloud computing also looks to be big this year and I’m hoping to be very active in this area, building on the work I did last year taking a more practical/infrastructure position on adoption. Hopefully I will have some exciting announcements on this front in the coming months.

*In terms of disclosure, VMware have offered me a free conference ticket in exchange for my coverage – there is absolutely no stipulation on positive/biased content, so I’ll be free as ever to give my opinion. My employer is likely to be covering my travel expenses for the event as I was going to be attending anyway.

Excellent Set of Resources for VMware HA

Free EMC Celerra for your Home/Lab

Virtualgeek has an interesting post here about a freely downloadable VM version of their Celerra product, including an HA version. This is an excellent idea for testing and lab setups, and a powerful tool in your VM Lab arsenal alongside other offerings like Xtravirt Virtual SAN and OpenFiler.

I’ve been saying for a while that companies that make embedded h/w devices and appliances should try to offer the software running their devices as VMs so people can get them into lab/test environments quickly. Most tech folk would rather download and play with something now than have to book and take delivery of an eval involving sales drones (apologies to any readers who work in sales), pre-sales professional services, evaluation criteria etc. If your product is good it’s going to get recommended, no smoke and mirrors required.

As such VM appliances are an excellent pre-sales/eval tool, rather than stopping people buying products. Heck, they could even licence the VM versions directly for production use (as Zeus do with their ZXTM products); this is a very flexible approach and something that is important if you get into clouds as an internal or external service provider – the more you standardise on commodity hardware with a clever software layer the more you can recycle, reuse and redeploy without being tied into specific vendor hardware etc.

Most “appliances” in use today are actually low-end PC motherboards with some clever software in a sealed box. For example, I really like the Juniper SA range of SSL VPN appliances; I recently helped out with a problem on one which was caused by a failed HDD, and if you hook up the console interface it’s a commodity PC motherboard in a sealed case running a proprietary secure OS. As it’s all Intel-based, there’s no reason it couldn’t also run as a VM (SSL accelerator h/w can be turned off in the software, so there can’t be any hard dependency on any SSL accelerator cards inside the sealed box). Adopting VMs for these appliances provides the same (maybe even better) level of standard {virtual} hardware that appliance vendors need to make their devices reliable/serviceable.

Another example: the firmware that is embedded in the HP Virtual Connect modules I wrote about a while back runs under VMware Workstation. HP have an internal-use version for engineers to do some development and testing against; sadly they won’t redistribute it as far as I am aware.

PSOD – Purple Screen of Death

Just in case you ever wondered what it looks like, here is a screendump..

This is the VMware equivalent of Microsoft’s BSOD (Blue Screen of Death).

I got this whilst running ESX 3.5 under VMware Workstation 6.5 build 99530. It happened because I was trying to boot my ESX installation from a SCSI hard disk – which it didn’t like, I assume because of driver support. I swapped it for an IDE one and it worked fine…

Update – actually the VM had only 384MB of RAM allocated and that’s what stopped it from booting.. upped to 1024MB and it runs fine.

It’s the first time I’ve seen one – all the production ESX boxes I’ve worked with have always been rock-solid (touch wood).

I was preparing a blog post about unattended installations of ESX when I hit this, in case you were wondering.
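If you are nesting ESX under Workstation, a quick sanity check of the VM's memory setting can save you a PSOD. The sketch below reads the memsize entry from the text of a .vmx file. The 1024MB threshold is simply the value that worked for me above, not an official minimum, and the function names are invented for this example.

```python
import re
from typing import Optional

# Assumption: 1024MB is the value that booted for me, not a documented minimum
MIN_MEMSIZE_MB = 1024

MEMSIZE_PATTERN = re.compile(
    r'^\s*memsize\s*=\s*"(\d+)"', flags=re.MULTILINE | re.IGNORECASE
)


def vmx_memsize_mb(vmx_text: str) -> Optional[int]:
    """Return the memsize value (in MB) from .vmx file text, or None if absent."""
    match = MEMSIZE_PATTERN.search(vmx_text)
    return int(match.group(1)) if match else None


def enough_memory(vmx_text: str, minimum_mb: int = MIN_MEMSIZE_MB) -> bool:
    """True only if memsize is present and at least minimum_mb."""
    size = vmx_memsize_mb(vmx_text)
    return size is not None and size >= minimum_mb


if __name__ == "__main__":
    sample = 'displayName = "esx35-nested"\nmemsize = "384"\n'
    print("memsize:", vmx_memsize_mb(sample), "enough:", enough_memory(sample))
```

To check a real VM, read the .vmx file into a string and pass it to enough_memory.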

Handy Reference Chart for Microsoft Server Application Licences

Taken from a download on the Microsoft Partner Licensing Specialist site, the following diagram makes for a useful quick reference chart for what licensing options are applicable to the big MS Server apps – far easier than having to check the product sites and documentation individually if you are trying to spec something up.

There is also lots more useful information on this site. It’s designed to train people to become Microsoft licensing specialists (MLSS/MLSE), so it’s mainly sales-staff-orientated training, but there is some useful/easy-to-digest reference material for techies/consultants alike if you’ve ever struggled to understand Microsoft licensing.

Useful links..

Revision Presentations – .PDF files to download https://partner.microsoft.com/UK/40033119

Training Videos – downloadable http://www.microsoft.com/uk/partner/learningpaths/?id=licensing.mlss

Certified..

I had my 2nd attempt at the VMware FastTrack course last week (the 1st attempt was cut short due to power problems, and I’ve only just managed to find the time to reschedule).

The course went ok. The 1st 2 days were a bit dull as I’d already sat through them once; the course has been restructured since and now runs contiguously through the 2 books, whereas in the 1st attempt we were jumping back and forth, as the Fast Track course is the Install & Configure and DSA courses mashed together.

I found the pace ok; in fact I could probably have been pushed a lot harder, so I didn’t find it as “intensive or extended hours” as billed – we finished by 5.30 at the latest most days, and earlier on others. The last day did feel a bit like treading water as it was quite spread out.

Wasn’t overly impressed by the facilities at QA-IQ’s Roseberry Ave – could do with a lick of paint, some better lighting and A/C that works properly. In all fairness it did look like they were in the middle of refitting it. More importantly the instructor was good and the kit/resources worked as required – no free lunch though 😉

No VCP exam voucher is included with QA-IQ, as you get at DNS Arrow. Considering the QA and DNS courses are virtually the same price I think that’s a bit cheap, so you may want to check with your vendor before booking.

There is a lot of team working in building up DRS clusters and doing HA etc. and you have to have sat the course in order to be officially certified as a VCP.

I sat my exam yesterday morning and passed – not by as much as I’d have liked, but I was a bit lazy and didn’t do much (any) specific exam prep. There were a whole load of questions on a particular subject that I had not revisited since the course*, and I fell foul of the “mark for review” option, where you can go back at the end and review/change your answers before submitting the exam; I changed several of them from correct to incorrect, as I later worked out. D’oh – I learnt (and subsequently forgot) that from my MCSE exam days: if you don’t know 100%, your 1st instinct was probably right.

Ah well, another one down – must get round to updating my MCSE too.. I quite like the certification exams, it’s just finding the time to learn the MS/VMware answers. I’m lucky that English is my 1st language, as I think a lot of the cert questions (not just VMware – MS, Cisco etc.) are more about English comprehension and understanding what they are actually asking in order to answer correctly.

*As usual I had to sign an NDA, so no I can’t say what they were – sorry

Virtualization – the key to delivering "cloud based architecture" NOW.

There is a lot of talk about delivering cloud or elastic computing platforms, a lot of CxO’s are taking this all in and nodding enthusiastically, they can see the benefits.. so make it happen!….yesterday.

Moving your services to the cloud isn’t always about giving your apps and data to Google, Amazon or Microsoft.

You can build your own cloud, and be choosy about what you give to others. Building your own cloud makes a lot of sense; it’s not always cheap, but it’s the kind of thing you can scale up (or down..) with a bit of up-front investment. In this article I’ll look at some of the practical, more infrastructure-focused ways in which you can do so.

Your “cloud platform” is essentially an internal shared-services system, run by a “platform” team that operates and capacity-plans the cloud platform; they manage its availability and maintenance day-to-day, as well as its expansion/contraction.

You then have a number of “service/application” teams that subscribe to services provided by your cloud platform team… they are essentially developers/support teams that manage individual applications or services (for example payroll or SAP, web sites etc.), business units and stakeholders etc.

Using the technology we discuss here you can delegate control to them over most aspects of the service they maintain – full access to app servers etc. and an interface (human or automated) to raise issues with the platform team or log change requests.

I’ve seen many attempts to implement this in the physical/old world and it just ends in tears, as it builds a high level of expectation that the server/infrastructure team must be able to respond very quickly to the end-“customer”. The customer/supplier relationship is very different… regardless of what OLA/SLA you put in place.

However, the reality of traditional infrastructure is that the platform team can’t usually react as quickly as the service/application teams need/want/expect, because they need to have an engineer on-site, wait for an order and a delivery, a network provisioning order etc. (although banks do seem to have this down quite well, it’s still a delay.. and time is money, etc.)

Virtualization and some of the technology we discuss here enable the platform team to keep one step ahead of the service/application teams by allowing them to do proper capacity planning and maintain a pragmatic headroom of capacity and make their lives easier by consolidating the physical estate they manage. This extra headroom capacity can be quickly back-filled when it’s taken up by adopting a modular hardware architecture to keep ahead of the next requirement.
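The headroom idea can be put into rough numbers. This is a back-of-envelope Python sketch only: the 25% headroom default, the single "capacity units" measure and the function names are all my own assumptions for illustration, and a real capacity plan would track CPU, RAM, storage and network separately.

```python
import math


def hosts_needed(demand: float, host_capacity: float, headroom: float = 0.25) -> int:
    """Hosts required to carry demand while keeping a fraction of each host free.

    demand and host_capacity use the same (arbitrary) capacity unit.
    headroom is the fraction of capacity deliberately left unused.
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")
    if host_capacity <= 0:
        raise ValueError("host_capacity must be positive")
    usable_per_host = host_capacity * (1 - headroom)
    return math.ceil(demand / usable_per_host)


def modules_to_add(current_hosts: int, demand: float, host_capacity: float,
                   headroom: float = 0.25) -> int:
    """How many extra hosts (blades/modules) to order to restore the headroom."""
    return max(0, hosts_needed(demand, host_capacity, headroom) - current_hosts)


if __name__ == "__main__":
    # Hypothetical figures: 4 hosts of 20 units each, demand has grown to 61 units
    print("hosts needed:", hosts_needed(61, 20))
    print("modules to add:", modules_to_add(4, 61, 20))
```

The point is that the platform team orders the next module when the headroom is eaten into, rather than when a service team turns up with a request.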

Traditional infrastructure = OS/App Installations

- 1 server per ‘workload’

- Silo’d servers for support

- Individually underused on average = overall wastage

- No easy way to move workload about

- Change = slow, person in DC, unplug, uninstall, move reinstall etc.

- HP/Dell/Sun Rack Mount Servers

- Cat 6 Cables, Racks and structured cabling

The ideal is to have an OS/app stack that can have workloads moved from host A to host B. This is a nice idea, but there are a whole heap of dependencies with the typical applications of today (IIS/Apache + scripts, RoR, SQL DB, custom .NET applications). Most big/important line-of-business apps are monolithic, and today that makes this hard. Ever tried to move a SQL installation from OLD-SERVER-A to SHINY-NEW-SERVER-B? Exactly. *NIX is better at this, but not that much better.. downtime required or complicated failover.

This can all be done today, and virtualization is the key to doing it. It makes it easy to move a workload from A to B: we don’t care about the OS/hardware integration – we standardise/abstract/virtualize it, and that allows us to quickly move it. It’s just a file and a bunch of configuration information in a text file… no obscure array controller firmware to extract data from or outdated NIC/video drivers to worry about.

Combine this with server (blade) hardware, modern VLAN/L3 switches with trunked connections and virtualised firewalls, and you have a very compelling solution that is not only quick to change but makes more efficient use of the hardware you’ve purchased… so each kWh you consume brings more return, not less, as you expand.

Now, move this forward and change the hardware for something much more commodity/standardised

Requirement: Fast, scalable shared storage, flexible allocation of disk space, thin provisioning and the ability to de-duplicate data, reduce overhead etc.

Solution: SAN storage – EMC Clariion, HP EVA, Sun StorageTek; iSCSI for lower requirements, or storage over a single Ethernet fabric – NetApp/EqualLogic

Requirement: Common chassis and server modules for quick, easy rip and replace and efficient power/cooling.

Solution: HP/Sun/Dell Blades

Requirement: quick change of network configurations, cross connects, increase & decrease bandwidth

Solution: Cisco switching, trunked interconnects, 10Gb/bonded 1GbE, VLAN isolation, quick change enabled as beyond initial installation there are fewer requirements to send an engineer to plug something in or move it, Checkpoint VSX firewalls to allow delegated firewall configurations or to allow multiple autonomous business units (or customers) to operate from a shared, high bandwidth platform.

Requirement: Ability to load balance and consolidate individual server workloads

Solution: VMware Infrastructure 3 + management toolset (SCOM, VirtualCenter, custom integrations specific to you using the API/SDK etc.)

Requirement: Delegated control of systems to allow autonomy to teams, but within a controlled/auditable framework

Solution: Normal OS/app security delegation, Active Directory, NIS etc. VirtualCenter, Checkpoint VSX, custom change request workflow and automation systems which are plugged into platform APIs/SDKs etc.

The following diagram is my reference architecture for how I see these cloud platforms hanging together

As ever more services move into the “cloud” or the “mesh” then integrating them becomes simpler, you have less of a focus on the platform that runs it – and just build what you need to operate your business etc.

In future maybe you’ll be able to use the public cloud services like Amazon AWS to integrate with your own internal cloud, allowing you to retain the important internal company data but take advantage of external, utility computing as required, on demand etc.

I don’t think we’ll ever get to (or want to) be 100% in a public cloud, but this private/internal cloud allows an organisation to retain its own internal agility and data ownership.

I hope this post has demonstrated that whilst, architecturally, “cloud” computing sounds a bit out-there, you can practically implement it now by adopting this approach for the underlying infrastructure for your current application landscape.

VMware/Cisco Switching Integration

As noted here, there is a doc jointly produced by VMware and Cisco which has all the details required for integrating VI virtual switches with physical switching.

Especially handy if you need to work with networking teams to make sure things are configured correctly to allow proper failover between redundant switches/fabrics etc. – it’s not as simple as it looks, and people often forget the switch-side configurations that are required.

Doc available here (c.3Mb PDF)

Free SAN for your Home/Work ESX Lab

VM/Etc have posted an excellent article about a free iSCSI SAN VM appliance that you can download from Xtravirt.

It uses replication between 2 ESX hosts to allow you to configure DRS/HA etc.

Excellent! I’m going to procure another cheap ESX host in the next couple of weeks, so will post back on my experiences with setting this up. My previous plan meant I’d have to get a 3rd box to run an iSCSI server like OpenFiler to enable this functionality, but I really like this approach.

Sidenote – Xtravirt also have some other useful downloads like Visio templates and an ESX deployment appliance available here