Virtualization, Cloud, Infrastructure and all that stuff in-between

My ramblings on the stuff that holds it all together

vT.A.R.D.I.S – 10 ESXi node cluster on a trolley as demonstrated at London VMUG

I recently presented a session at the London VMware User Group meeting about home labs; this post is a follow-up with the slides I used and some more details on the configuration.

The kit I demo’d has affectionately been named the vT.A.R.D.I.S, which stands for Trolley Attached Random Datacentre of Inexpensive Servers 🙂 or Hernia-maker – don’t feel like you actually have to strap yours to a trolley though 🙂

This is part of a series of joint postings with my esteemed colleague Mr. Techhead. My sections of the series concentrate on the details of building a virtualized ESX cluster using vSphere 4 for learning and test & development, along with some of the configurations and use cases; Techhead’s posts will focus on the best low-cost hardware to use and the specific configuration steps.

You may be wondering why you would want to do this. Well, if you are studying for your VCP or developing scripts or utilities for managing vSphere environments, you rarely have a multi-node cluster at your disposal to test against, because by its very nature it requires a lot of {usually expensive} hardware, and so you miss out on the more advanced configurations like HA/DRS/FT that this type of environment can use.

Also consider the larger production-type environment where you want to test some automatic deployment or management scripts and tools – this is an ideal approach which uses minimal hardware to conduct the 1st stages of test and development – if you’re an ITIL shop this is release management. Even the best equipped test labs won’t give you more than a couple of hosts to play with – this virtualized ESX approach means you can have many more ESX hosts to test against without busting the bank.

So we have put our heads together and have come up with what we think is the lowest possible cost way to build such an environment. Unsurprisingly it makes heavy use of virtualization, giving you something to study and work on without it:

- being too noisy to leave switched on

- drawing too much {expensive} power

- costing the earth

The catch: of course nothing is free, so building this will cost you some money, but it will be a lot less than your typical production environment and sits more in the hobbyist market. You get what you pay for, and I wouldn’t go into this expecting it to perform well enough for you to compete with EC2 🙂 but for your own general home use, and probably that of a SoHo/SME-type organisation, it’s ideal.

The photo below shows the demo kit we used for the London meeting cunningly strapped to a B&Q trolley for “portability” 🙂

To break it down into each major functional area, and as a taster of the follow-up posts, here are some of the things you need to consider:

Storage

Shared storage is a requirement for HA/DRS/FT and is usually the most expensive part of a production environment, which would typically use a Fibre Channel SAN with SCSI disks. You’ll never get this on our budget, so we have taken the open-source, iSCSI and SATA approach. We have put this through its paces for the last 2 years in varying topologies; it performs very well and will more than service your own personal/study needs. It also has the advantage that it can probably be recycled from that pile of spare PC parts you have in the cupboard.

There are also a number of low-cost NAS devices which should be within your budget if you don’t have any spare parts; Techhead has a number of posts on the way covering this.

Network

Building flat networks is easy: you just need a dumb switch, or even a hub, and away you go. But by doing this you miss the subtle configuration problems you need to understand to do things properly in a production environment, so ideally you need something that will support VLANs and routing. You also need Gigabit ports for vSphere, although I have had vMotion working on a 100Mb switch in the past.

We have looked for a long time but there are no cheap (<£400) Gigabit switches that can also do the routing, even if you go 2nd hand.

There are numerous low-end switches that support VLANs but can’t do the routing between VLANs, so you need either an external hardware router like a Cisco 2600 or something else.

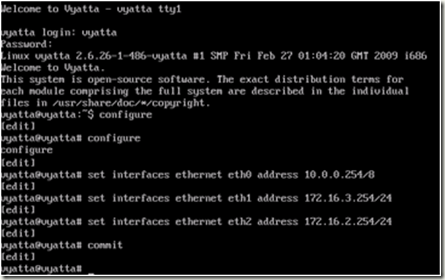

So, a compromise is needed: we opt for a low-cost Gigabit switch with VLAN support like the 8-port Linksys SLMxxx and complement it with a virtual machine running the Vyatta community edition virtual appliance, which can provide the L3 routing between your VLANs (a sample of how easy it is to configure is shown below).
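As a rough illustration of the router-on-a-stick idea (this is not the configuration from the demo kit), the Python sketch below prints the Vyatta CLI commands that would give the appliance one routed sub-interface (vif) per VLAN. The interface name `eth0`, the VLAN IDs and the subnets are all made-up lab values, and the choice of the first usable address as the gateway is an assumption too.

```python
import ipaddress


def vyatta_vlan_commands(interface, vlans):
    """Build Vyatta 'set' commands: one routed vif per VLAN on a trunk port.

    vlans maps a VLAN ID to the subnet carried on that VLAN.
    """
    commands = []
    for vlan_id, subnet in sorted(vlans.items()):
        network = ipaddress.ip_network(subnet)
        # Assumption: the router takes the first usable address in each subnet.
        gateway = next(iter(network.hosts()))
        commands.append(
            f"set interfaces ethernet {interface} vif {vlan_id} "
            f"address {gateway}/{network.prefixlen}"
        )
    return commands


# Hypothetical VLAN plan for a small lab; adjust to taste.
LAB_VLANS = {
    10: "192.168.10.0/24",  # management
    20: "192.168.20.0/24",  # vMotion
    30: "192.168.30.0/24",  # iSCSI
}

if __name__ == "__main__":
    print("configure")
    for line in vyatta_vlan_commands("eth0", LAB_VLANS):
        print(line)
    print("commit")
    print("save")
```

The output can be pasted into the Vyatta console; the switch port facing the Vyatta VM (and the vSwitch port group it sits on) then needs to carry all of those VLANs tagged.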

Server

Techhead is an avid HP fan, and rightly so as they make great production kit, but I had never really explored the lower end of their range such as the ML110 and ML115. These are single-CPU-socket servers with internal (non hot-swap) SATA storage; whilst they don’t have on-board redundant hardware they are quiet and, more importantly, surprisingly cheap and fully ESX 3/4 compatible.

Techhead has some good deals on the ML115 G5 hardware at this link, here and here, and best of all the ML115 G5 is compatible with the new Fault Tolerance feature of vSphere.

If you wonder what is inside an ML115 server, read this link.

Hypervisor & Nested Hypervisor VM

VMware ESXi is my current weapon of choice for this environment and so will be the focus of this series of posts. Unfortunately I’ve not found a way to run nested Hyper-V or Xen virtual machines, which would be the ultimate in flexible learning platforms – unless anyone out there knows how to?

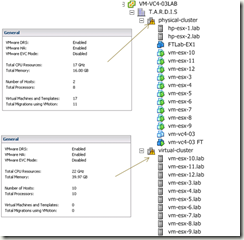

I make heavy use of the new Fault Tolerance feature of vSphere to protect the vCenter and Vyatta virtual machines in this environment.

It’s also the ideal setup to test unattended deployments of ESX hosts, as you can just delete them and start again.

Virtualized ESX Hosts – 10 ESXi hosts running on 2 physical machines

Detailed Posts Index

Rather than do one long post we have a series of break-out posts on the specific areas of this topic.

This is the list of topics to come; when articles are posted the links will be populated and become clickable.

Part 1 – Lab Hardware Overview (coming soon @Techhead)

Part 2 – Lab Hardware Configuration (coming soon @Techhead)

Part 3 – ESXi Installation & Configuration (coming soon @Techhead)

Part 4 – Shared Storage Installation & Configuration (coming soon @Techhead)

Part 5 – Networking Configuration (VLAN’ing & Jumbo Frames) (coming soon @Techhead)

Part 6 – VM’d ESXi (Coming soon @vinf.net)

Part 7 – VM’d vCenter; auto start-up of VMs (Coming soon @vinf.net)

Part 8 – VM’d FT and FT’ing vCenter VMs (Coming soon @vinf.net)

Part 9 – FT on the ML115 series – benchmarking with some Exchange VMs (Coming soon @vinf.net)

Part 10 – VM’d Lab Manager farm environment on a pair of ML’s (VM’d ESXi) (Coming soon @vinf.net)

Part 11 – VM’d View 4 farm environment on a pair of ML’s (VM’d ESXi) (Coming soon @vinf.net)

Part 12 – Home backup – VMware data recovery / fastSCP/Veeam backup or something else low-cost with USB drives/etc. (Coming soon – joint posting)

The slides from my original VMUG presentation are available online at this link

Post fixed with a couple of stupid typos and URL for slide download now goes direct to the .PPT

apologies, need more sleep!

Looking forward to the breakout articles, in particular the shared storage.

Keep up the good work!

I’m building my vSphere home lab now and have a quick question. On which machine do you install the vCenter server? The ESX4 obviously installs on the ML115’s, but do you have another machine, such as your laptop or a separate workstation for the vCenter server’s role? If so, can it be a normal workstation, or does it require the hefty CPU and RAM that the ML115’s have?

Thanks!

Jeff

Jeff – I just run VC as a virtual machine on the same host – or when I have more than 1 node in the cluster as a DRS-enabled VM; works well for me – there is no dependency on VC for HA to function so it works well enough (or use FT to protect the VC VM) – just be a bit careful what you use as a DNS server on each ESX host or use host files.
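If you go the host-file route, a small script saves typing the same entries on every box. This is only a sketch: the `esx` name prefix, the `lab.local` domain and the starting IP address are invented for the example, so swap in whatever your lab uses.

```python
import ipaddress


def hosts_entries(prefix, domain, first_ip, count):
    """Return hosts-file lines for sequentially numbered, sequentially addressed hosts."""
    start = ipaddress.ip_address(first_ip)
    lines = []
    for i in range(count):
        name = f"{prefix}{i + 1:02d}"
        # Format: IP address, fully qualified name, short alias
        lines.append(f"{start + i}\t{name}.{domain}\t{name}")
    return lines


if __name__ == "__main__":
    # Hypothetical values: two ESX hosts starting at 192.168.10.11
    for line in hosts_entries("esx", "lab.local", "192.168.10.11", 2):
        print(line)
```

Append the same output to the hosts file on each ESX host (and on the vCenter VM) so name resolution keeps working even when the DNS server VM is down.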

Thanks for the reply! When you say node in the cluster, are you referring to multiple ESX servers? I will have two physical ESX servers in my setup initially, and will add two more within a few months.

Will I configure a vCenter image on just one of the ESX servers that will manage all 4, or is the DRS-enabled vCenter image like a cluster of 4 vCenter images?

I’m sorry if I’m not wording this well…thanks for the help!

Jeff

Hi Jeff,

I have 2 or more physical ESX nodes in a DRS enabled cluster, in my lab configs I just have a single VM running vCenter – which manages the 2 (or more) physical ESX nodes – single VM and vCenter instance but via vMotion and DRS it could run on either physical node (and be HA’d should one fail) – or if you have compatible h/w (like the AMD ML115g5) then you can use the FT feature (..assuming valid ESX licenses) to have the VM run live on both physical hosts – instant VM level failover if a host fails.

Also worth bearing in mind you need shared storage for the DRS/vMotion/HA/FT functionality

You can cluster together vCenter instances at the application level using vCenter Heartbeat, but I’ve not used it myself (think of it as something like the Doubletake product, but for vCenter).

Vinf,

I finally understand it now…thank you for that very detailed answer. I will have shared storage (Iomega StorCenter ix2-200 2TB), so I should be good to go there.

My ESX servers are on the compatibility list (Dell PowerEdge 1850’s with 12GB of RAM and PERC 4e/Si w/256MB BBWC). Considering the total cost of the servers and RAM, these were the cheapest I could find, so I’m looking forward to adding more as soon as I can.

As a final question, can you give any advice on the cheapest route to go for the required licenses? Please let me know if this is an inappropriate question, but I purchased Ghost and had planned on installing the trial versions of the software (again, study lab only) and capture a ghost image of each of the ESX servers. Since I’ll use shared storage for all data, I should be able to reimage these when needed, correct? Is there a better way to go about this?

thanks again! this is an awesome thread and I’ve enjoyed reading thru your site.

Jeff

No probs, glad you found it useful.

Licenses are troublesome, and will be the subject of a dedicated post in the series.

The short answer is, out of the box you get a 60-day eval for the enterprise-level features. If you don’t have access to NFR licenses then you’re stuck and will have to reinstall every 60 days and apply for a new trial key; it’s hard to do it legally if you want to play around with the enterprise-level features.

Microsoft have a nicer system for home/lab/test licensing with the MSDN and TechNet subscriptions, but I guess MS do suffer some abuse of these licenses by people using them for production rather than what they were intended for (home/test/dev) – but they are big enough to absorb it; maybe VMware will look to doing a similar thing (fingers crossed)

In your case I don’t think Ghost would work as eval time-bombs will be tied to the system clock.


I run nested XenServer 5.x VMs under ESX 4.0U1A and then run Linux 32bit VMs under those nested XenServer 5.x servers (Windows VMs under the nested XenServer 5.x VM won’t run because the AMD-V and Intel VT instructions from the physical CPU apparently can’t be passed to more than one VM deep in the nesting structure). Your physical CPU must be AMD-V or Intel VT capable and have that engaged in the BIOS. Choose “Other 64 bit” for the XenServer 5.x O/S type under ESX 4.0 VM configuration. I have to run the XenServer 5.x VM locally on the ESX host local hard drive and the nested Linux VM running on the XenServer 5.x VM also locally because my shared storage is a bit weak. If you have Fibre or professional grade iSCSI it may not require the XenServer VM to run locally on the ESX host. XenCenter sees the XenServer VMs just like they’re physical servers.

The physical ESX 4.0 hosts that run the nested XenServer 5.x VMs also have a mix of nested ESX 4.0 VMs that run 32 bit Windows VMs and 32 bit Linux VMs.

I haven’t looked at nested Hyper-V VMs running under ESX 4.0.

Datto

I just ran a quick test and installed a nested XenServer 5.5 server on a physical ESX 4.0 server that doesn’t have AMD-V or Intel VT capability and then started up a Linux VM on the nested XenServer 5.5.

So XenServer doesn’t require AMD-V nor Intel VT to run nested under ESX 4.0 and have Linux VMs running on it. CPU for the test was a single-core AMD Athlon 64 3500 so everything does run rather slowly but it was just a test to see if AMD-V/VT was required.

Windows VMs are still out of the question on nested XenServer VMs though.

Datto

It looks like, from a not-so-quick test yesterday, a nested Hyper-V Server R1 and R2 will run under ESX 4.0U1A with some tweaks to the Hyper-V Server .vmx file but you can’t get nested VMs to run on the nested Hyper-V Server VMs. The nested Hyper-V Servers will show up in Hyper-V Manager as usual but there’s no way to get nested VMs to run on the Hyper-V Server VMs.

Datto

Datto, can you share the .vmx hacks to get Hyper-V running? Very interesting, even if nested VMs are not possible.
