Virtualization, Cloud, Infrastructure and all that stuff in-between

My ramblings on the stuff that holds it all together

Comparing the I/O Performance of 2 or more Virtual Machines SSD, SATA & IOmeter

I’m currently doing some work on SSD storage and virtual machines so I need an easy way of comparing I/O performance between a couple of virtual machines, each backed onto different types of storage.

I normally use IOmeter for this kind of work but generally only in a standalone manner – i.e. I run IOmeter inside a single VM guest and get statistics on the console, etc.

With a bit of a read of the manual I quickly realised IOmeter was capable of so much more! (amazing things, manuals :)).

Note: You should download IOmeter from this link at SourceForge and not this link to iometer.org, which seems to be an older, unmaintained build.

You can run a central console which runs the IOmeter Windows GUI application then add any number of “managers” – which are machines doing the actual benchmarking activities (disk thrashing etc.)

The use of the term manager is a bit confusing to me, as you would think the “manager” is the machine running the IOmeter console. Actually, each VM or physical server you want to load-test is known as a manager, which in turn runs a number of workers. The workers carry out the I/O tasks you specify and report the results back to a central console (the IOmeter GUI application shown above).

Each VM that you want to test runs the dynamo.exe command with some switches to point it at an appropriate IOmeter console to report results.

For reference:

On the logging machine run IOmeter.exe

On each VM (or indeed physical machine) that you want to benchmark at the same time, run the dynamo.exe command with the following switches:

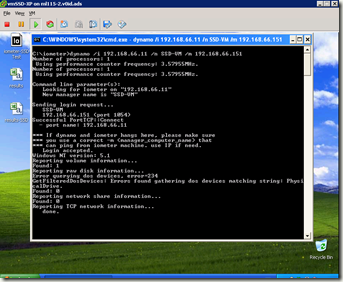

dynamo.exe /i <IP of machine running IOmeter.exe> /n <display name of this machine – can be anything> /m <IP address or hostname of this machine>

In my case:

dynamo.exe /i 192.168.66.11 /n SATA-VM /m 192.168.66.153
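If you have more than a couple of VMs to test, typing that command by hand on each one gets tedious. Here is a minimal Python sketch that builds the command line for each manager from a small inventory. Only the /i, /n and /m switches and the SATA-VM values come from this post; the SSD-VM address, the inventory and the helper names are made up for illustration.

```python
import subprocess

CONSOLE_IP = "192.168.66.11"  # machine running IOmeter.exe (from this post)

# Display name -> address of each machine to benchmark.
# SATA-VM is from this post; the SSD-VM address is a hypothetical example.
MANAGERS = {
    "SATA-VM": "192.168.66.153",
    "SSD-VM": "192.168.66.154",
}


def dynamo_command(console_ip, manager_name, manager_address, exe="dynamo.exe"):
    """Build the dynamo.exe argument list using the /i, /n and /m switches."""
    return [exe, "/i", console_ip, "/n", manager_name, "/m", manager_address]


def all_commands(console_ip=CONSOLE_IP, managers=MANAGERS):
    """Return {display name: command line string} for every manager."""
    return {
        name: subprocess.list2cmdline(dynamo_command(console_ip, name, address))
        for name, address in managers.items()
    }


if __name__ == "__main__":
    for name, command in all_commands().items():
        print(f"{name}: {command}")
```

This only prints the command lines; you still need to run each one on the relevant VM yourself, by whatever means you normally use.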

You will then see output similar to the following;

The IOmeter console will now show all the managers you have logged on – in my case I have one VM backed to a SATA disk and one VM backed to an SSD disk.

I can now assign some disk targets and access specifications to each worker and hit start to make it “do stuff which I can measure” 🙂 For more info on how to do this, see the rather comprehensive IOmeter manual.

If you want to watch in realtime, click the results display tab and move the update frequency slider to as few seconds as possible

If you want to compare figures from multiple managers (VMs) against each other you can just drag and drop them on to the results tab

Then choose the metric you want to compare from the boxes – which don’t look like normal drop-down elements, so you probably didn’t notice them.

You can now compare the throughput of both machines in real-time next to each other – in this instance the SSD backed VM achieves less throughput than the SATA drive backed VM (more on this consumer-grade SSD in a later post)

Depending on the options you chose when starting the test run, the results may have been logged out to a CSV file for later analysis.
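For that later analysis, something like the following Python sketch could pull the per-manager figures out of the results file. It assumes the file has a header row containing a target type column and a target name column, followed by one row per target with the type set to MANAGER for each machine. The column names, the MANAGER tag and the sample figures below are assumptions for illustration, so check them against your own file and pass in different names if yours differ.

```python
import csv
import io

# Made-up sample in the assumed layout; the numbers are NOT real results.
SAMPLE = """'Results
'Target Type,Target Name,Access Specification Name,IOps,MBps
ALL,All,Example spec,300.0,30.0
MANAGER,SATA-VM,Example spec,200.0,20.0
MANAGER,SSD-VM,Example spec,100.0,10.0
WORKER,Worker 1,Example spec,100.0,10.0
"""


def manager_metrics(csv_text, metrics=("IOps", "MBps"),
                    type_col="'Target Type", name_col="Target Name",
                    manager_tag="MANAGER"):
    """Return {manager name: {metric: value}} from results text.

    All column names and the manager tag are assumptions about the layout.
    If a manager appears in several result blocks, the last one wins.
    """
    header = None
    results = {}
    for row in csv.reader(io.StringIO(csv_text)):
        if type_col in row and name_col in row:
            header = row  # remember the most recent header row
            continue
        if header is None:
            continue  # still in the preamble before any results table
        record = dict(zip(header, row))
        if record.get(type_col) != manager_tag:
            continue  # skip the ALL, WORKER and any other row types
        if all(m in record for m in metrics):
            results[record[name_col]] = {m: float(record[m]) for m in metrics}
    return results


if __name__ == "__main__":
    for name, values in manager_metrics(SAMPLE).items():
        print(name, values)
```

To run it against a real file, read the file into a string and pass it in place of SAMPLE.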

Hope that helps get you going – if you want to use this approach to benchmark your storage array with a standard set of representative IOmeter loads – see these VMware communities threads

http://communities.vmware.com/thread/197844

http://communities.vmware.com/thread/73745

From a quick scan of the thread, this file seems to be the baseline everyone is measuring against:

http://www.mez.co.uk/OpenPerformanceTest.icf

To use the above file you need to open it with IOmeter, then start up your VMs that you want to benchmark as described earlier in this post.

You will need to manually assign the disk target to each worker once you have opened that .icf file in IOmeter unless you set them in the .icf file manually.

This is the test whilst running, with the display adjusted to show interesting figures. Note that the standard test contains a number of different iterations and access profiles; the figures shown here are just averages since the start of the test, not final results.

This screenshot shows the final results of the run, and the verdict is that, overall, the consumer-grade SSD sucks when compared against a single 7.2k RPM 1TB SATA drive plugged into an OpenFiler 🙂 I still have some analysis to do on that one, and it’s not quite that simple: a number of different tests are run as part of the sequence, some of which are better suited to SSDs.
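One reason a single overall figure can mislead is that bandwidth is simply I/O operations per second multiplied by the transfer size, so a test with small transfers and a test with large transfers are measuring quite different things. A quick Python sketch of the arithmetic follows; the IOps figures in it are invented purely to illustrate the relationship and are not measurements from this run.

```python
def bandwidth_mib_per_s(iops, transfer_size_kib):
    """Bandwidth in MiB/s for a given I/O rate and transfer size in KiB."""
    return iops * transfer_size_kib / 1024


if __name__ == "__main__":
    # Hypothetical workloads: (label, IOps, transfer size in KiB)
    examples = [
        ("small random I/O", 5000, 4),
        ("large sequential I/O", 400, 256),
    ]
    for label, iops, size_kib in examples:
        mib = bandwidth_mib_per_s(iops, size_kib)
        print(f"{label}: {iops} IOps x {size_kib} KiB = {mib:.1f} MiB/s")
```

In this made-up example the small-transfer workload does over ten times as many operations per second yet moves about a fifth of the data, which is why it is worth looking at IOps and MBps per access profile rather than at one averaged number.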

More posts on this to follow on SSD & SATA performance for your lab in the coming weeks, stay tuned..

Where SSD will shine is small (4K-8K) random IO – ergo look for workloads that drive tiny bandwidth (MBps) and massive throughput (IOps).

Where SATA will shine is large (256K+) sequential IO – ergo look for workloads that drive massive bandwidth (MBps) and tiny throughput (IOps).

Chad

Hey Chad, thanks for that; doing some research into this so that’s useful

that’s one of the reasons for not selecting SSD in my new desktop….

Pingback: So you bought an equallogic san, now what…. part three « Michael Ellerbeck

Great post, going to play with new IX4-200D today 🙂

Pingback: It’s voting time.. « Virtualization, Windows, Infrastructure and all that “stuff” in-between

It’s very hard to believe that a good SATA drive will outperform a good SSD at anything, and I don’t think you have proven that here – we must know the details of the drive you were using.

Are you meaning to say that a bad or old model SSD is not as good as SATA for VMs? That’s easy to believe.

It would be great to see results for Intel’s G2 SSD, or any Barefoot controller based drive.

SSD drives older than the ones I mention don’t represent the current state of the SSD market, which changes very quickly.