Virtualization, Cloud, Infrastructure and all that stuff in-between

My ramblings on the stuff that holds it all together

Category Archives: vTARDIS

Using Magic Whiteboard paper to document your home lab

Yes, I have a rack in my home lab… they are usually free and easy to come by if you have the means to transport them. I’ve had this one for a number of years – it has basic shelves inside and all my kit sits inside it reasonably tidily.

Here is a quick tip: get some magic whiteboard paper – like this. This stuff is great and it sticks to pretty much everything using static, so no messy adhesive etc.

If you have a spare wall, or in my case a spare side of a rack, it works very well stuck there as shown below (the magnets shown in the pic aren’t actually required – it stays on all by itself).

Leave some whiteboard pens nearby and you’ll find yourself actually updating even a basic form of documentation for your home lab regularly.

HP ML115 G5 fans noisy following firmware upgrade

As part of my UK VMUG tour preparation I am rebuilding the vTARDIS on the GA build of vSphere; part of this required updating the firmware of my ML115 G5 server.

You can download the latest BIOS upgrade here (I had to use the USB key method as I don’t have a compatible OS installed to allow the online ROM flash process).

Now, if you only do this, the fans will stay at 100% and it’s very noisy!

To fix this you need to install the following BMC (Baseboard Management Controller) upgrade

Upgrade BMC firmware

And that should sort it out

vTARDIS 5 at a VMUG near you…

I am pleased to announce that I will be giving a session on my updated vTARDIS 5 home lab environment at two upcoming VMUGs in the UK (for more background, see the vTARDIS 5 page here)

Dates and links for registration are as follows if you want to come and see it in action. I’ll be doing plenty of demos and Q&A and minimal PowerPoint

11th October – Virtual Machine User Group, Mint Hotel, Leeds

Virtualisation user group with a multi-vendor slant, presentations from Andrew Fryer (Microsoft), Veeam and a number of independent consultants and community members

3rd November UK National VMware User Group, National Motorcycle Museum, Solihull

With sessions from Duncan Epping & Frank Denneman, Joe Baguley (VMware), a number of independent consultants, community members and vendor sponsors

VCDX Peer defence panel – a 1st for VMware User Groups: dry-run your VCDX defence presentation in front of a group of peers, maybe also including some former real VCDX panellists.

See this post for more information and drop me an email (details on about page) to register your interest.

Hope to see you there. If you’re interested in a repeat of these sessions at your own local VMUG drop me a line – have case, can travel (in the past it’s been to BriForum Chicago and VMworld SF)

vTARDIS.next runs on vSphere 5.0

I have been working furiously on an updated version of the vTARDIS based around vSphere 5, and now it’s in the open (I was on the heavily NDA’d beta programme). The goal is the same: the lowest-cost physical hardware possible, with the ability to build complex production-like ESX environments and run large numbers of virtual ESX hypervisors and guests.

This time round, thanks to some VERY cool [UNSUPPORTED] features of ESX 5, you can run more than just the clusters of nested ESX virtual machines I had in the original vTARDIS. You can also run all of the following on a SINGLE physical server running vSphere 5 – this is seriously cool IMHO

- Run 64-bit guests inside an ESX virtual machine (you couldn’t do this previously as it didn’t pass through the x64 extensions)

- Run Hyper-V under ESX as a virtual machine, and allow it to run nested guests* and even build clusters of Hyper-V servers

- Run XenServers under ESX as a virtual machine, and allow it to run nested guests (to be tested)

- Fully stateless deployment of ESX guests using my former colleague’s amazing PXE Manager so no need to configure lots of hosts

- Run large vSphere clusters

- Multiple Virtual Storage Appliances

*I have had problems with the guests inside virtual Hyper-V nodes, more on this soon as I think it was just a config problem

Hardware

In the past I used the HP ML1xx range of PC-servers. They’re great bits of kit but I was starting to run into challenges with the 8Gb RAM limit, so I have branched out a bit and gone for an HP DL380 G5. Yes, it’s not quiet enough to put under your bed 😉 but they are reasonably cheap 2nd hand on eBay and, more importantly, they take up to 32Gb of RAM. In day-to-day use it’s not too noisy.

Total acquisition cost of this server was as follows (all from eBay and out of my own pocket)

| Item | Cost |
| --- | --- |
| HP DL380 G5, 2 x dual core Xeon 2.0GHz, 8Gb RAM, 2 x 72Gb SAS disks, P400 array controller, redundant PSU, iLo advanced license. | £550 (Refurbished) |
| 6 x 72Gb 10k RPM SAS HP Disks | £270 (Refurbished) |
| 32Gb RAM | £520 (!) ouch new. |
| Sold supplied 8Gb RAM kit from server | -£150 |
| Total Cost | £1,190 |
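If you want to sanity-check the total, here is a quick sketch in Python. The dictionary keys are just my shorthand labels for the rows in the table; the figures are the ones quoted above, with the resold RAM kit entered as a negative amount.

```python
# Line items from the cost table, in GBP. A negative value is money recovered.
costs_gbp = {
    "HP DL380 G5 (refurbished)": 550,
    "6 x 72Gb 10k RPM SAS disks (refurbished)": 270,
    "32Gb RAM (new)": 520,
    "Sold supplied 8Gb RAM kit": -150,
}


def net_cost(items):
    """Sum every line item, including negative entries for resold parts."""
    return sum(items.values())


total = net_cost(costs_gbp)
print(f"Net acquisition cost: £{total:,}")
```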

This box has a good pedigree, as you’ll see, because its former owners didn’t erase the iLO settings before they decommissioned it (oops).

Whilst it’s noisy as hell, it seems surprisingly frugal when it comes to power consumption with approx 50% CPU load on the physical host

Storage

Local DAS array of 8 x 72Gb SFF hot-plug HP drives, I’m also backing them up to my Iomega NAS using Veeam Backup and Replication 5.

Hypervisor

I did this work with the beta and Release Candidate (RC) builds of ESX and vCenter, and I used the Windows vCenter installation rather than the appliance.

Next… The iTARDIS* more soon!

*Don’t try this at home.. you won’t be able to make it work (yet), there are some firmware issues that stop it working on a Mac Mini – but I had access to some internal resources whilst working at VMware to get around it

Stay tuned for step-by-step instructions

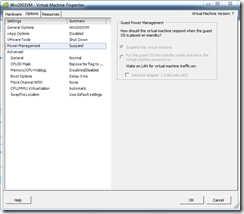

vSphere Host cannot enter Standby mode using DPM and WoL

I encountered this error in my lab recently. I previously wrote about how I was able to use DPM in my home lab; I’ve since re-built it into a different configuration and found that I was no longer able to use just Wake on LAN (WoL) to put idle cluster hosts into standby.

I got the following error: “vCenter has determined that it cannot resume host from standby. Confirm that IPMI/iLO is correctly configured or, configure vCenter to use Wake-On-LAN.”

The 1st thing to note is that there is nowhere in vCenter to actually configure Wake-on-LAN; however, you can check to see which NICs in your system support Wake on LAN (not all do, and not all have WoL-enabled vSphere drivers)

My ML115 G5 host has the following NICs

- Broadcom NC380T dual port PCI Express card – which does not work with Wake on LAN

- Broadcom NC105i PCIe on-board NIC which does support WoL.

In my current configuration the onboard NIC is connected to a dvSwitch and is used for the management (vmk) interface

This seems to be the cause of the problem, because if I move the vmk interface (management NIC) out of the dvSwitch and configure it to use a normal (standard) vSwitch, DPM works correctly.

In a production environment a real iLO/IPMI NIC is the way to go as there are many situations that could make WoL unreliable. However, if you want to use DPM and don’t have a proper iLO you need to rely on Wake-on-LAN, so you need to consider the following:

- you need a supported NIC

- you need a management interface on a standard vSwitch, connected to a supported NIC
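If you want to check whether a NIC responds to WoL independently of vCenter, you can send the magic packet yourself from another machine on the same LAN. The sketch below is my own illustration rather than anything vCenter does internally: it builds the standard magic packet (6 bytes of 0xFF followed by the target MAC address repeated 16 times) and sends it as a UDP broadcast. The MAC address is a placeholder, and UDP port 9 is just a commonly used choice.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return a Wake-on-LAN magic packet: 6 x 0xFF, then the MAC 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 48-bit MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet on the local network over UDP."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


if __name__ == "__main__":
    # Placeholder MAC: replace it with the address of the host's WoL-capable NIC.
    example = build_magic_packet("00:11:22:33:44:55")
    print(f"magic packet is {len(example)} bytes")
    # send_magic_packet("00:11:22:33:44:55")  # uncomment to actually send it
```

Put the host into standby, send the packet and see if it powers back on. If it does not, the NIC or its driver is the more likely culprit than DPM itself.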

Also worth noting: if you are attempting to build a vTARDIS with nested ESXi hypervisors you cannot use DPM within any of your nested ESXi nodes. The virtual NICs (e1000) do support WoL with some other guest types like Windows, so those VM guests can respond to WoL packets (via the following UI screens)

However, the e1000 driver that ships with vSphere ESX/ESXi does not seem to implement the WoL functionality, so you won’t be able to use DPM to put your vESXi guests to sleep. It’s kind of an edge-case bit of functionality, and ESX isn’t an officially supported guest OS within vSphere, so it’s not that surprising.

Home Labbers beware of using Western Digital SATA HDDs with a RAID Controller

I recently came across a post on my favourite car forum (pistonheads.com) asking about the best home NAS solution – original link here.

What I found interesting was a link to a page on the Western Digital support site stating that desktop versions of their hard drives should not be used in a RAID configuration as it could result in the drive being marked as failed.

Now, this is far from the best-written or most comprehensive technote I have ever read, but I wasn’t aware of this limitation. It appears that desktop (read: cheap) versions of their drives have a different data recovery mechanism to enterprise (read: more expensive) drives that could result in an entire drive being marked as bad in a hardware RAID array – the technote is here and pasted below:

What is the difference between Desktop edition and RAID (Enterprise) edition hard drives?

Answer ID 1397 | Published 11/10/2005 08:03 AM | Updated 01/28/2011 10:00 AM

Western Digital manufactures desktop edition hard drives and RAID Edition hard drives. Each type of hard drive is designed to work specifically as a stand-alone drive, or in a multi-drive RAID environment.

If you install and use a desktop edition hard drive connected to a RAID controller, the drive may not work correctly. This is caused by the normal error recovery procedure that a desktop edition hard drive uses.

Note: There are a few cases where the manufacturer of the RAID controller have designed their drives to work with specific model Desktop drives. If this is the case you would need to contact the manufacturer of that controller for any support on that drive while it is used in a RAID environment.

When an error is found on a desktop edition hard drive, the drive will enter into a deep recovery cycle to attempt to repair the error, recover the data from the problematic area, and then reallocate a dedicated area to replace the problematic area. This process can take up to 2 minutes depending on the severity of the issue. Most RAID controllers allow a very short amount of time for a hard drive to recover from an error. If a hard drive takes too long to complete this process, the drive will be dropped from the RAID array. Most RAID controllers allow from 7 to 15 seconds for error recovery before dropping a hard drive from an array. Western Digital does not recommend installing desktop edition hard drives in an enterprise environment (on a RAID controller).

Western Digital RAID edition hard drives have a feature called TLER (Time Limited Error Recovery) which stops the hard drive from entering into a deep recovery cycle. The hard drive will only spend 7 seconds to attempt to recover. This means that the hard drive will not be dropped from a RAID array. While TLER is designed for RAID environments, a drive with TLER enabled will work with no performance decrease when used in non-RAID environments.
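To make the timing in the technote concrete, here is a toy model in Python. It is only a sketch of the reasoning, not how any real controller decides: the function name is mine, and the numbers are the ones quoted above (up to 2 minutes of deep recovery for a desktop drive, 7 seconds with TLER, and a controller allowing 7 to 15 seconds).

```python
def dropped_from_array(recovery_seconds: float, controller_timeout_seconds: float) -> bool:
    """True if the drive is still recovering when the controller gives up on it."""
    return recovery_seconds > controller_timeout_seconds


DESKTOP_WORST_CASE_SECONDS = 120  # "up to 2 minutes" of deep recovery
TLER_LIMIT_SECONDS = 7            # a TLER drive stops trying after 7 seconds

# The technote says most controllers allow between 7 and 15 seconds.
for timeout in (7, 15):
    desktop = dropped_from_array(DESKTOP_WORST_CASE_SECONDS, timeout)
    tler = dropped_from_array(TLER_LIMIT_SECONDS, timeout)
    print(f"controller timeout {timeout}s: desktop dropped={desktop}, TLER dropped={tler}")
```

In this model a desktop drive in its worst-case recovery cycle gets dropped at either end of the controller range, while the TLER drive never does, which matches what the technote describes.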

There are even reports of people saying WD had refused warranty claims because they discovered their drives had been used in such a way, which isn’t nice.

This is an important consideration if you are building or already using a NAS for your home lab, like a Synology or QNAP with WD HDDs. Maybe this even extends to a software NAS solution like FreeNAS, OpenFiler or Nexentastor.

It’s also unclear if this is just a Western-Digital specific issue or exists with other drive manufacturers.

Maybe someone with deeper knowledge can offer some insight in the comments, but I thought I would bring it to the attention of the community. These are the sort of issues I was talking about in this post but, as with everything in life, you get what you pay for!

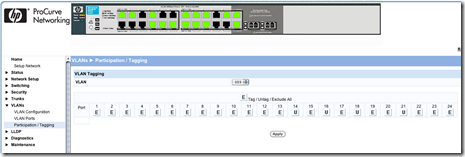

How to Configure a Port Based VLAN on an HP Procurve 1810G Switch

I have a new switch for my home lab as I was struggling with port count and I managed to get a good deal on eBay for a 24-port version – it’s also fan-less so totally silent which is nice as it lives in my home office.

I am re-building my home lab again (I’m not sure I ever finish a build before I find something new to try, but I digress). Now that I have 3 NICs in my hosts I want a dedicated iSCSI network using a VLAN on my switch.

My NAS(es) are physical devices and I want to map one NIC from each ESX host into an isolated VLAN for iSCSI/NFS traffic. This means nominating a physical switch port to be part of a single VLAN (103) only and taking it out of the native VLAN (1). Cisco call this an access port and other switches call it a Port Based VLAN (PVLAN) – this is the desired configuration

The configuration steps weren’t so intuitive on this switch so I have documented them here:

- 1st create a VLAN – in my case I’m using 103 which will be for iSCSI/NFS

- You need to check the “create VLAN” box and type in the VLAN number

- press Apply

- Check the set name box next to the VLAN you created

- type in a description

- click apply

Then go to VLANs—> Participation/Tagging

- You need to clear the native VLAN (1) from the ports you will be using

- select VLAN 1 from the drop down box

- click each port (in this case 13,14,15,16,17,18 and 21) until it goes from U to E (for Exclude)

- click apply (important!)

- (Note: 13, 15 and 17 are used for my vMotion VLAN, but the principle is the same)

- select your VLAN from the drop down – in this case 103

- Now allocate each port to your storage VLAN by clicking on it until it turns to U (for Untagged)

- click apply (important!)

Now you should have those ports connected directly to VLAN 103 and they will only be able to communicate with each other. The easiest way to test this is to ping between hosts connected on this VLAN.

You can manually check you have done this correctly by looking at VLANs—>VLAN Ports

- Drop down the Interface box and choose a port that you have put into the PVLAN

- The read-only PVID field should say 103 (or whatever VLAN ID you chose). If it says 1 or something else, check your config as the port is in the wrong VLAN.
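If it helps to see the logic of the steps above written down, here is a small Python model of the Participation/Tagging page. This is purely an illustration of the U/E bookkeeping and not a way of talking to the switch. The assumptions are mine: every port starts Untagged in VLAN 1, a new VLAN starts with every port Excluded, and the storage ports are 14, 16, 18 and 21 (the ports from my list that aren’t used for vMotion).

```python
PORT_COUNT = 24
STORAGE_VLAN = 103
STORAGE_PORTS = [14, 16, 18, 21]  # assumption: ports not used for vMotion


def factory_default(port_count):
    """Assumed default: every port is Untagged ("U") in native VLAN 1."""
    return {1: {port: "U" for port in range(1, port_count + 1)}}


def create_vlan(participation, vlan_id, port_count):
    """Assumed default for a new VLAN: every port is Excluded ("E")."""
    participation[vlan_id] = {port: "E" for port in range(1, port_count + 1)}


def move_to_access_vlan(participation, port, vlan_id):
    """Exclude the port from VLAN 1, then make it Untagged in the new VLAN."""
    participation[1][port] = "E"
    participation[vlan_id][port] = "U"


def expected_pvid(participation, port):
    """The PVID should be the single VLAN in which the port is Untagged."""
    untagged = [vlan for vlan, ports in participation.items() if ports[port] == "U"]
    if len(untagged) != 1:
        raise ValueError(f"port {port} is untagged in {untagged}, expected exactly one VLAN")
    return untagged[0]


participation = factory_default(PORT_COUNT)
create_vlan(participation, STORAGE_VLAN, PORT_COUNT)
for port in STORAGE_PORTS:
    move_to_access_vlan(participation, port, STORAGE_VLAN)

for port in STORAGE_PORTS:
    print(f"port {port}: PVID {expected_pvid(participation, port)}")
```

The `ValueError` case in the model is a port left Untagged in both VLAN 1 and 103, which corresponds to skipping the step of clearing VLAN 1 from the port.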

You won’t be able to get into this VLAN from any other VLAN or the native VLAN (because we excluded VLAN 1 from these ports). If you want to be able to get into this VLAN you’ll need to dual-home one of the hosts or add a layer 3 router; I usually use a Vyatta virtual machine – post on this coming soon.

I’ll also be adding some trunk ports to carry guest network VLANS in a future post.

Nexentastor CE performance not as good as expected with SSD cache? Check it is actually working

I encountered this problem in my lab – I have the following configuration physically installed on an HP MicroServer for testing (although I will probably put it into a VM later on)

1 x 8Gb USB flash drive holding the boot OS

And the following configured into a single volume, accessed over NFSv3 (see this post for how to do that)

1 x 64Gb SSD Drive as a cache

4 x 160Gb 7.2k RPM SATA disks for a raid volume in a raidz1 configuration

A quick benchmark using Iometer showed that it was being outperformed on all I/O types by my Iomega IX4-200d, which is odd, as my Nexentastor config should be using an SSD as cache for the volume, making it faster than the IX4. So I decided to investigate.

If you look in data management/Data Sets and then click on your volume you can see how much I/O is going to each individual disk in the volume.

In my case the SSD c3d1 had no I/O at all – so if you click on the name of the volume shown in green (in my case it’s called “fast”) then you are shown the status of the physical disks.

So, from looking at the following screen: Houston, we have a problem. My SSD is showing as faulted (but no errors are recorded either), so I need to investigate why (and hope it’s still under warranty; if it has actually failed this will be the 2nd time this SSD has been replaced!)

Attempts to manually online the disk return no error but don’t work either, so I’m not entirely sure what happened there. I did have to shut down the box and move it, so I re-seated all the connectors, but it still wouldn’t let me re-enable the disk.

Worth noting that even with this fault the volume remained online, just without the cache enabled, so I was able to storage vMotion all the VMs off it, then delete and re-create the volume (this time I re-created it without any mirroring for maximum performance).

Once I had storage vMotioned the test VM back (again, no downtime – good old ESX!) I ran some more Iometer tests and performance looked a lot better (see below)

I’ll be posting some proper benchmarks later on, but for now it was interesting to see how much better it could perform than my IX4 (although remember there is no data-protection/RAIDZ overhead, so a disk fault will destroy this LUN – good enough for my home lab though, and I plan to RSYNC the contents off to the IX4 for a backup).
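As a rough illustration of that trade-off, here is a back-of-the-envelope calculation in Python for my 4 x 160Gb disks. It is a simplification: it treats raidz1 as costing one disk’s worth of capacity for parity and ignores ZFS metadata and other overheads, so real usable space will be a bit lower. The function name is mine.

```python
def usable_gb(disks: int, size_gb: int, parity_disks: int = 0) -> int:
    """Approximate usable capacity: total disks minus parity, times disk size."""
    if parity_disks >= disks:
        raise ValueError("need more disks than parity disks")
    return (disks - parity_disks) * size_gb


# Same 4 x 160Gb SATA disks in both layouts.
raidz1_gb = usable_gb(4, 160, parity_disks=1)  # survives one disk failure
stripe_gb = usable_gb(4, 160)                  # survives no disk failures

print(f"raidz1: ~{raidz1_gb}Gb usable, stripe: ~{stripe_gb}Gb usable")
```

So the stripe buys roughly one extra disk of capacity (and skips the parity work on writes) at the cost of losing everything if any single disk fails.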

Fingers crossed this isn’t a fault with my SSD… time will tell!

Nexentastor, When 1Gb just isn’t enough

I have been trying to get my Nexentastor SSD/SATA hybrid NAS working this last week and I’ve found that the web UI grinds to a halt sometimes for me, I couldn’t find a UNIX ‘top’ equivalent quickly but the diagnostic reports that you can generate from the setup menu command line did indicate that it was short of RAM.

The HP MicroServer I am using shipped with 1Gb of RAM, and normally that would be fine for a file-server/NAS, but I’m thinking that Nexentastor does a fair bit more and is based on OpenSolaris rather than a stripped-down Linux or BSD. The eval guide says 768Mb is enough for testing, 2Gb is better and 4Gb is ideal, so I was already pushing my luck with 1Gb for any real use.

So, I bit the bullet and ordered 8Gb of RAM for the server, which is the maximum you can install – ironically this cost the same amount as I paid for the whole Microserver in the 1st place (after the cash-back deal) but that’s reflective of the fact it only has 2 memory slots so I had to opt for the more expensive 4Gb chips.

I went for 8Gb as at some point I will probably re-run my experiments under ESXi and deploy this host as a part of my management cluster for the vTARDIS.cloud.

I am also booting the OS from a USB flash-drive – I had several 2Gb units but it wouldn’t install to them as they didn’t have quite enough space, so I’m using an 8Gb flash drive to hold the OS – this isn’t the most performant drive either so any swapping will be further impacted by the USB speed.

I’m pleased to report that the 8Gb RAM upgrade has resolved all the problems with navigating the UI, and should also yield further I/O performance as the Nexentastor software uses the extra RAM as extra cache (ARC) as well as the SSD (L2ARC) – there is a good explanation of that on this blog post.

I’m going to post up my I/O benchmarking when I have some further wrinkles ironed out – in the meantime there is an excellent post here with some example benchmarks running Nexentastor in a VM on a slightly more powerful HP ML110 server.

Building a Fast and Cheap NAS for your vSphere Home Lab with Nexentastor

My home lab is always expanding and evolving – no sooner have I started writing up the vTARDIS.cloud configuration than something shiny and new catches my eye! Fear not, I will be publishing the vTARDIS configuration notes over the next 2 months. In the meantime, however, I have noticed that my IX4-200d NAS has been bogging down performance a bit recently; I attribute this to the number of VMs I am running across the 5 physical (and up to 50) vESXi hosts.

The IX4 is great and very useful for protecting my photos and providing general media storage, but I suspect it uses 5.4k RPM disks and in a RAID5 configuration it performs /ok/, but I feel the need, the need for speed

With the sub-£100 HP MicroServer deal that is on at the moment I spotted an opportunity to combine it with some recycled hardware into a fast NAS box, using some new software: the NexentaStor Community Edition. I’ve used OpenFiler and the Celerra VSA a lot in the past but this has some pretty intriguing features.

Nexentastor allows you to use SSD as a cache and provides a type of software RAID using Sun’s ZFS technology – you can read a good guide to configuring it inside a VM on this excellent post

I already have a number of ML115 and ML110 servers, which all boot from 160Gb 7.2k RPM SATA disks that do nothing most of the time. So an idea was born: I will switch my home lab to boot from 2Gb USB sticks (of which I have a plentiful supply) and re-use those fast SATA disks in the HP MicroServer for shared, fast VM storage

I also have a spare 64Gb SSD from my original vTARDIS experiments which I am planning to re-use as the cache within the MicroServer

So, the configuration looks like this:

Because I want maximum performance and I don’t care particularly about data protection for this NAS I’m just going to try striping data across all the SATA disks for best performance and I hope the SSD will provide a highly performant front-end cache for VMs stored in it (if I understand how it works correctly).

Most of the VMs it will be storing are disposable or easily re-buildable but I can configure RSYNC copies between it and my IX4 for anything I want to keep safe (or maybe just use one of the handy NFR licenses Veeam are giving out)

I did consider putting ESXi on the HP MicroServer and running Nexentastor as a VM (which is supported) but I haven’t yet put any more RAM in the MicroServer, although I may do this in future and add it to my existing management cluster.

I’ll post up some benchmarks when I’m done.